The graphics community has long been searching for ways to avoid the Uncanny Valley effect in human avatars. This project lays the foundation for a human-to-human interaction study on mirror neurons. However, the initial stage focuses more on proof of concept: seeing how I can take scanned models of real people and integrate face and body motion capture.

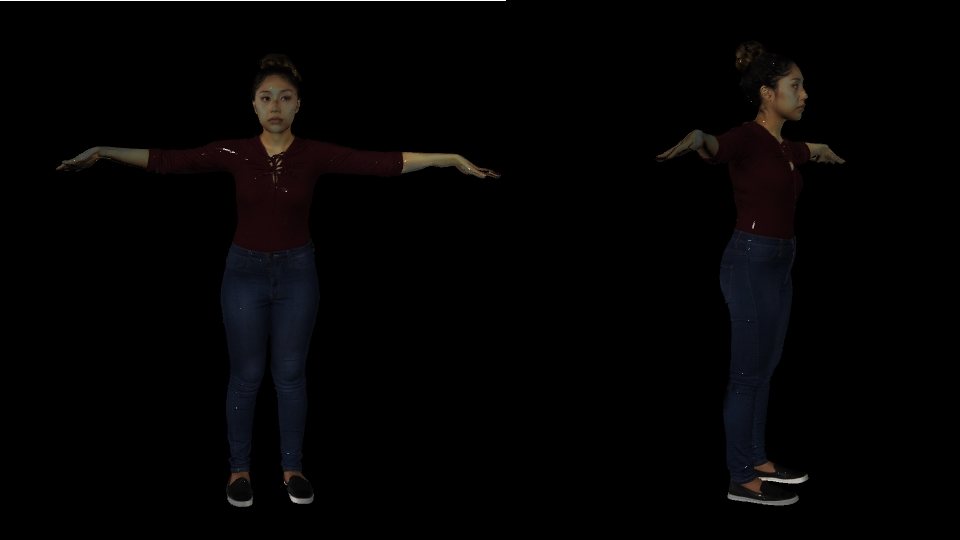

I used the Eva Lite 3D scanner to capture a person's whole body in 3D. Unfortunately, Unity limits how many polygons an animated model can have, so I had to cut the model's polygon count down drastically (by roughly a factor of ten, though that figure is not exact). Another difficulty was that the scanned model's texture was not mapped efficiently, something I had to be constantly aware of.

Next, I used the OptiTrack cameras to record the person's natural body movements as motion capture. As an initial database, we used a previously published database of positive, negative, and neutral gestures. Only after this database was built did we decide to include face motion capture, which I recorded in a separate process using Autodesk MatchMover. Keeping the face and body databases separate turned out to be beneficial, since it allowed us to create different combinations of whole-body gestures.
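
To illustrate why separate databases help, here is a minimal Python sketch of how independent face and body clips can be paired into every possible combination. It is not the actual pipeline: the `combine_clips` helper is hypothetical, and I am assuming for illustration that the face clips carry the same positive, negative, and neutral labels as the body gestures.

```python
from itertools import product

# Body categories come from the gesture database described above.
BODY_CLIPS = ["positive", "negative", "neutral"]
# Assumption for illustration only: face clips use the same three labels.
FACE_CLIPS = ["positive", "negative", "neutral"]


def combine_clips(body_clips, face_clips):
    """Return every (body, face) pairing as a list of dicts."""
    return [{"body": b, "face": f} for b, f in product(body_clips, face_clips)]


if __name__ == "__main__":
    for combo in combine_clips(BODY_CLIPS, FACE_CLIPS):
        print(f"body={combo['body']:<8} face={combo['face']}")
```

Because the two lists are independent, adding one new face clip creates a new pairing with every existing body clip, with no need to record the body motion again.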

The final four animated models were imported into Unity and can be viewed on the HTC Vive. I am still refining this workflow for efficiency, but hopefully you can learn a lot from the experience in my tutorials.