[ External Sensors ]

VR Network Concurrency

Using Unity UNet or the OptiTrack NatNet SDK, I connected VR applications to a desktop computer to streamline data sharing. For example, I created an app that sends live mocap data to a Mecanim-based model.

Untethered Mobile Player Tracking

I collaborated with an international studio to implement live player tracking. We used OptiTrack Motion Capture sensors to pass marker coordinates to the Gear VR.

Mobile Handheld Controllers

I created a mobile VR controller experience for sedentary patients. We studied the spatial navigation memory of patients in bed.

Eye Tracking

Where a person looks is an integral part of learning and memory. Using an eye-tracking SDK for the Gear VR, I integrated a user interface and exported the gaze information for further analysis.

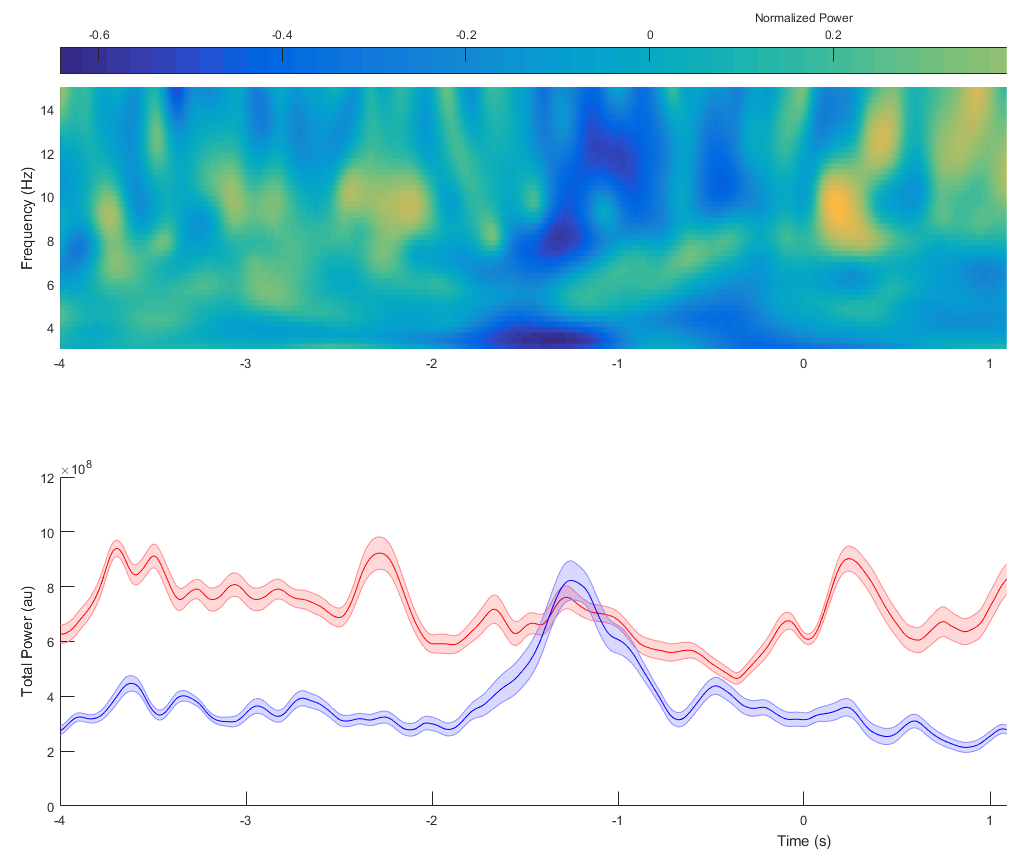

Output Electrophysiology and Behavioral Data

I gather behavioral data on each participant from the VR app, such as distance traveled and speed. I then compare these files to the corresponding participant's iEEG recordings.
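
As a rough illustration of the behavioral measures mentioned above, the sketch below computes total distance traveled and mean speed from timestamped position samples. The function name `path_metrics` and the `(t, x, y, z)` sample layout are assumptions made for this example, not the app's actual log format.

```python
import math


def path_metrics(samples):
    """Return (total_distance, mean_speed) for a movement trace.

    `samples` is a list of (t_seconds, x, y, z) tuples sorted by time.
    This layout is hypothetical; adapt it to the real log files.
    """
    if len(samples) < 2:
        return 0.0, 0.0

    total_distance = 0.0
    # Sum the straight-line distance between consecutive positions.
    for previous, current in zip(samples, samples[1:]):
        total_distance += math.dist(previous[1:], current[1:])

    duration = samples[-1][0] - samples[0][0]
    # Avoid dividing by zero when all samples share one timestamp.
    mean_speed = total_distance / duration if duration > 0 else 0.0
    return total_distance, mean_speed


if __name__ == "__main__":
    trace = [(0.0, 0.0, 0.0, 0.0), (1.0, 3.0, 4.0, 0.0), (2.0, 3.0, 4.0, 0.0)]
    distance, speed = path_metrics(trace)
    print(f"distance={distance:.2f} speed={speed:.2f}")
```

Keeping the timestamp with every sample matters here, since the same clock values would be needed to line the behavioral trace up against the iEEG recording.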